Der große Umbruch I & II

A journey to the hotspots of AI research in Europe, the USA, and China.

Machines rule…

Right in the middle of the Christmas holidays, of all times, the EU Commission is launching what is probably the most important debate about the future in our time.

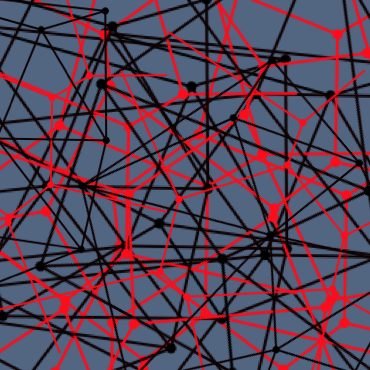

Nächste Ausfahrt Zukunft…

Our children and grandchildren are growing up in an exciting world: in some households, digital assistants such as Amazon's Alexa, Google Home, or the toy robot Cozmo have by now joined the household inventory...

ground facebook

Those were the good old days: in this country, the communication game began eleven years ago, at first with “SchülerVZ” and “StudiVZ”. It was a way for school pupils and university students to fill their breaks. But then Facebook displaced...

Fake news…

An interview on the subject of fake news. It triggered a remarkable reaction: even more fake news…

Integration…

…some people seem to believe that integration means teaching a Kenyan German until he is blond and white-skinned…

Book trailer

I created the following video myself. It shows which questions the book revolves around.